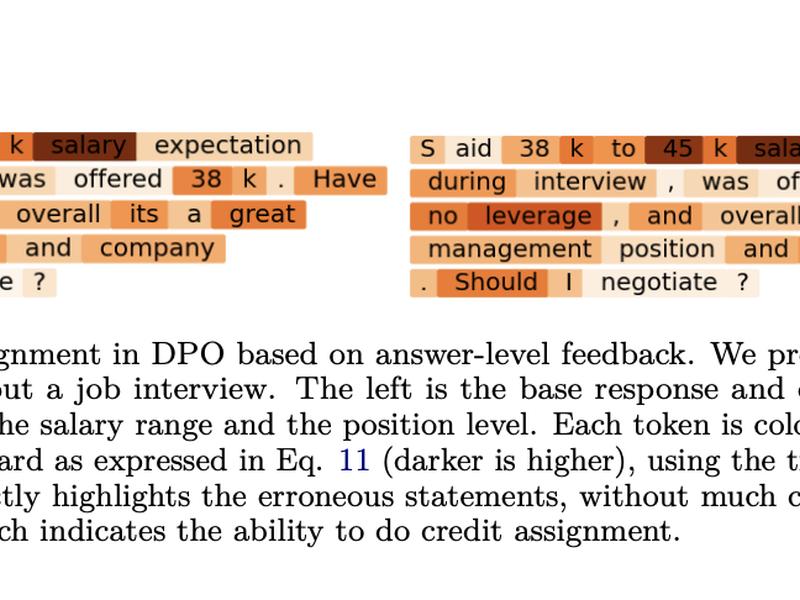

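Stanford researchers have introduced Direct Preference Optimization (DPO), a streamlined method for fine-tuning large language models (LLMs) on human preference data. Where reinforcement learning from human feedback (RLHF) first fits a separate reward model and then optimizes the LLM against it with reinforcement learning, DPO trains the model directly on pairs of preferred and rejected responses using a simple classification-style loss. This removes the separate reward-learning stage and is intended to give finer control over the model's responses while improving their quality and adaptability.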
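To make the idea concrete, here is a minimal sketch of the DPO loss for a single preference pair, as the objective is commonly written. The function name, argument names, and the default `beta=0.1` are illustrative assumptions, not taken from this article. The inputs are assumed to be summed log-probabilities of each full response under the model being trained (the policy) and under a frozen reference model.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Per-pair DPO loss: -log(sigmoid(beta * (chosen_logratio - rejected_logratio))).

    All names and the default beta are hypothetical, chosen for illustration.
    """
    # How much more (or less) likely each response is under the policy
    # than under the frozen reference model, in log space.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp

    # Implicit reward margin between the preferred and rejected response.
    margin = beta * (chosen_logratio - rejected_logratio)

    # -log(sigmoid(margin)) equals log(1 + exp(-margin)); branch on the
    # sign of the margin so exp() never receives a large positive argument.
    if margin > 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Policy identical to the reference: margin is 0, so the loss is log(2).
baseline = dpo_loss(-10.0, -12.0, -10.0, -12.0)

# Policy has shifted probability toward the preferred response: lower loss.
improved = dpo_loss(-8.0, -14.0, -10.0, -12.0)
```

No reward model appears anywhere in this computation: the log-ratio against the reference model plays the role of the reward, which is why the separate reward-learning stage can be dropped. In practice the loss would be averaged over a batch and minimized with a gradient-based optimizer in a deep learning framework.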