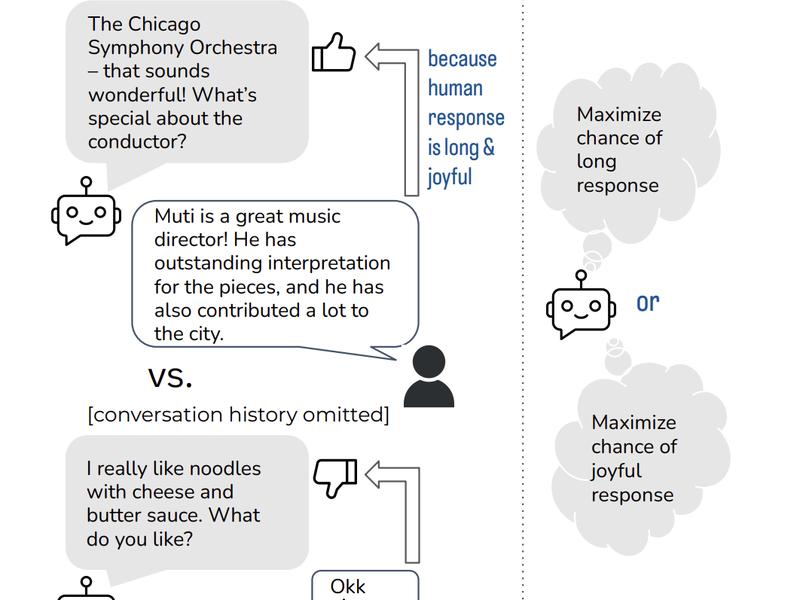

This article examines how implicit feedback signals in natural user conversations can be used to improve dialogue models, an approach related to reinforcement learning from human feedback (RLHF). Researchers from New York University and Meta AI investigate the problem using publicly available, de-identified data from the online deployment of BlenderBot. Both automatic metrics and human evaluations judge the responses of their new models to be better than those of the baseline.
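The article does not say which implicit signals are used, so the following is only a minimal sketch of the general idea under stated assumptions. It assumes a hypothetical proxy signal (whether the user's next message is reasonably long, taken as a sign of engagement) and shows how logged turns could be turned into labels for training a scorer, which then reranks candidate replies. The names `Turn`, `implicit_label`, `build_training_pairs`, `rerank`, and the 5-word threshold are all illustrative inventions, not the researchers' actual method.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Turn:
    bot_reply: str           # what the bot said
    next_user_message: str   # the user's message that followed it


def implicit_label(turn: Turn, min_words: int = 5) -> int:
    """Hypothetical proxy signal: label a bot reply 1 ("good") if the user's
    next message has at least `min_words` words, otherwise 0."""
    return int(len(turn.next_user_message.split()) >= min_words)


def build_training_pairs(turns: List[Turn]) -> List[Tuple[str, int]]:
    """Convert logged turns into (reply, label) pairs that a reward model
    or classifier could be trained on."""
    return [(t.bot_reply, implicit_label(t)) for t in turns]


def rerank(candidates: List[str], score_fn: Callable[[str], float]) -> str:
    """Return the candidate reply with the highest score under `score_fn`
    (in practice, a model trained on the pairs above)."""
    return max(candidates, key=score_fn)


logs = [
    Turn("I love hiking too! Where do you usually go?",
         "Mostly the trails near my town, especially in autumn."),
    Turn("OK.", "bye"),
]
pairs = build_training_pairs(logs)
print(pairs)

# Toy stand-in scorer (reply length); a real system would use a learned model.
best = rerank(["Sure.", "That sounds fun, what did you enjoy most?"],
              score_fn=lambda r: len(r.split()))
print(best)
```

Using a separate scorer to rerank candidates keeps the base generator unchanged; the same labels could instead serve as a reward signal for fine-tuning.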
