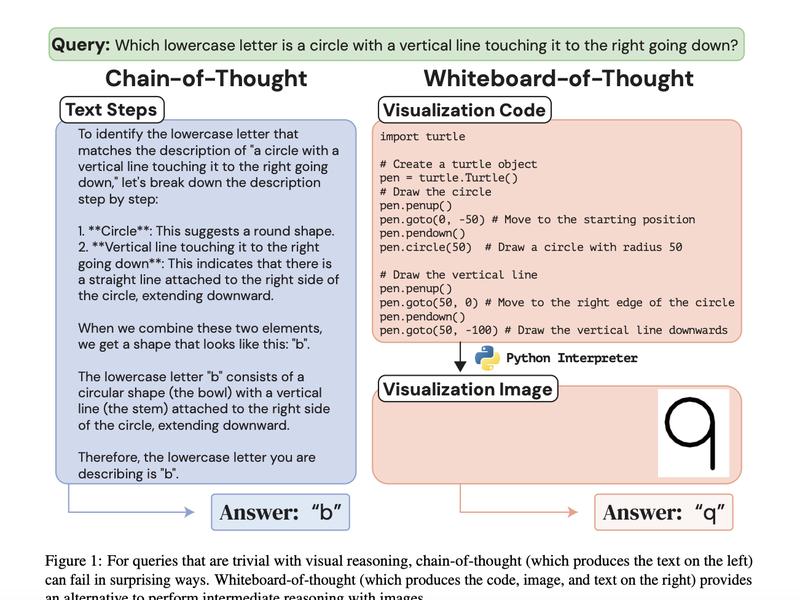

This article discusses the limitations of large language models (LLMs) on tasks that require visual and spatial reasoning and surveys approaches to improving their performance: chain-of-thought prompting, tool use and code augmentation, and whiteboard-style drawing to strengthen the visual reasoning of multimodal large language models (MLLMs). Researchers from Columbia University have proposed one such approach, Whiteboard-of-Thought (WoT) prompting, which lets an MLLM draw out its reasoning steps as images.
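To make the idea of drawing out reasoning steps as images more concrete, here is a minimal sketch of how such a loop might be wired together. The summary above says only that the MLLM draws its reasoning as images; the three-stage split used here (write drawing code, render it, read the picture back) and every name in the code (`whiteboard_of_thought`, `write_drawing_code`, `render`, `read_whiteboard`) are illustrative assumptions, not an API from the researchers. The stand-in functions do not call a real model or a real renderer.

```python
from typing import Callable


def whiteboard_of_thought(
    question: str,
    write_drawing_code: Callable[[str], str],
    render: Callable[[str], bytes],
    read_whiteboard: Callable[[str, bytes], str],
) -> str:
    """Hypothetical whiteboard-style prompting loop.

    1. The model is asked to sketch its reasoning as drawing code.
    2. The drawing code is rendered into an image (the "whiteboard").
    3. The image is passed back to the model together with the question.
    """
    drawing_code = write_drawing_code(question)
    whiteboard_image = render(drawing_code)
    return read_whiteboard(question, whiteboard_image)


# Stand-ins so the sketch runs without a model or a plotting library.
def fake_write_drawing_code(question: str) -> str:
    # A real system would prompt an MLLM here (assumption).
    return "draw_arrow('up'); draw_arrow('right')"


def fake_render(drawing_code: str) -> bytes:
    # A real system would execute the drawing code and return image bytes.
    return drawing_code.encode("utf-8")


def fake_read_whiteboard(question: str, image: bytes) -> str:
    # A real system would send the image and question to the MLLM.
    return f"answer based on {len(image)} bytes of whiteboard"


if __name__ == "__main__":
    print(
        whiteboard_of_thought(
            "Which way does the path end up pointing?",
            fake_write_drawing_code,
            fake_render,
            fake_read_whiteboard,
        )
    )
```

Passing the three stages in as callables keeps the sketch independent of any particular model or rendering backend; each stand-in can be swapped for a real component without changing the loop itself.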