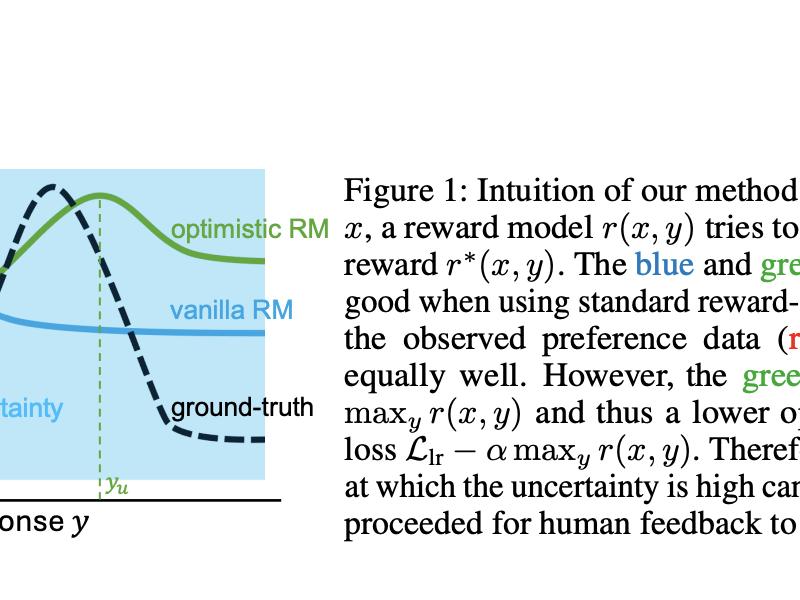

Large Language Models (LLMs) have advanced considerably through Reinforcement Learning from Human Feedback (RLHF), which learns a reward function from human preferences over prompt-response pairs and then optimizes the model against it. This alignment can be performed offline, on a fixed preference dataset, or online, where feedback is collected iteratively on the model's own responses; the online setting is more effective at exploring out-of-distribution regions. Preference optimization has proven highly effective at aligning LLMs with human goals.
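
To make the idea of a reward function based on human preferences concrete, here is a minimal sketch. It assumes the Bradley-Terry preference model, a common choice in RLHF that the text above does not itself specify. The function names and reward values are hypothetical and chosen only for illustration.

```python
import math


def preference_probability(reward_chosen: float, reward_rejected: float) -> float:
    """Bradley-Terry probability that the chosen response is preferred.

    Assumption: each response to a prompt has a scalar reward, and the
    preference probability is the sigmoid of the reward difference.
    """
    return 1.0 / (1.0 + math.exp(-(reward_chosen - reward_rejected)))


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Negative log-likelihood of one observed human preference."""
    return -math.log(preference_probability(reward_chosen, reward_rejected))


# Hypothetical reward scores for two responses to the same prompt.
loss_agree = preference_loss(2.0, 0.5)     # reward ordering matches the human label
loss_disagree = preference_loss(0.5, 2.0)  # reward ordering contradicts the label
```

Under this assumption, the loss is smaller when the reward ordering agrees with the human label, which is what pushes a learned reward function toward human preferences.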