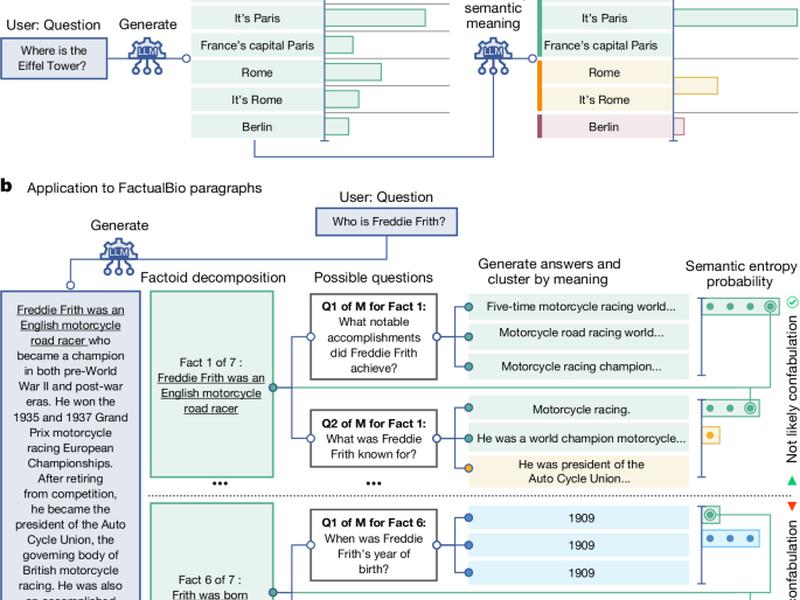

This article discusses semantic entropy as a strategy for overcoming confabulation in language models. The authors adapt classical probabilistic machine learning techniques to the distinctive properties of modern language models. They also introduce predictive entropy, a measure of the uncertainty in a language model's output distribution. The approach could improve the accuracy and reliability of language-based AI systems.
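To make the two quantities concrete, here is a minimal sketch in Python. It assumes that predictive entropy is the Shannon entropy of the probabilities a model assigns to a set of candidate answers, and that semantic entropy is the same quantity computed after pooling the probability of answers that share a meaning. The sample answers, their probabilities, and the `meaning_of` helper are hypothetical and chosen only for illustration; this is not the authors' implementation, and a real system would need a principled way to decide when two answers mean the same thing.

```python
import math
from collections import defaultdict


def predictive_entropy(probs):
    """Shannon entropy (in nats) of a discrete distribution: -sum(p * log p)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def semantic_entropy(answers, probs, meaning_of):
    """Entropy after pooling probability mass over answers with the same meaning.

    `meaning_of` is a hypothetical helper that maps an answer to a label
    identifying its meaning; it stands in for a real equivalence check.
    """
    cluster_mass = defaultdict(float)
    for answer, p in zip(answers, probs):
        cluster_mass[meaning_of(answer)] += p
    return predictive_entropy(cluster_mass.values())


# Hypothetical answers to "What is the capital of France?" with made-up probabilities.
answers = ["Paris", "It's Paris", "The capital is Paris", "Lyon"]
probs = [0.4, 0.3, 0.2, 0.1]


def meaning_of(answer):
    # Toy rule for illustration only: group answers by the city they mention.
    return "paris" if "Paris" in answer else "lyon"


pe = predictive_entropy(probs)
se = semantic_entropy(answers, probs, meaning_of)
print(f"predictive entropy: {pe:.3f} nats")
print(f"semantic entropy:   {se:.3f} nats")
```

In this toy case the semantic entropy comes out lower than the predictive entropy, because three of the four answers are different phrasings of the same claim. Uncertainty over wording is discounted, and only uncertainty over meaning remains.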