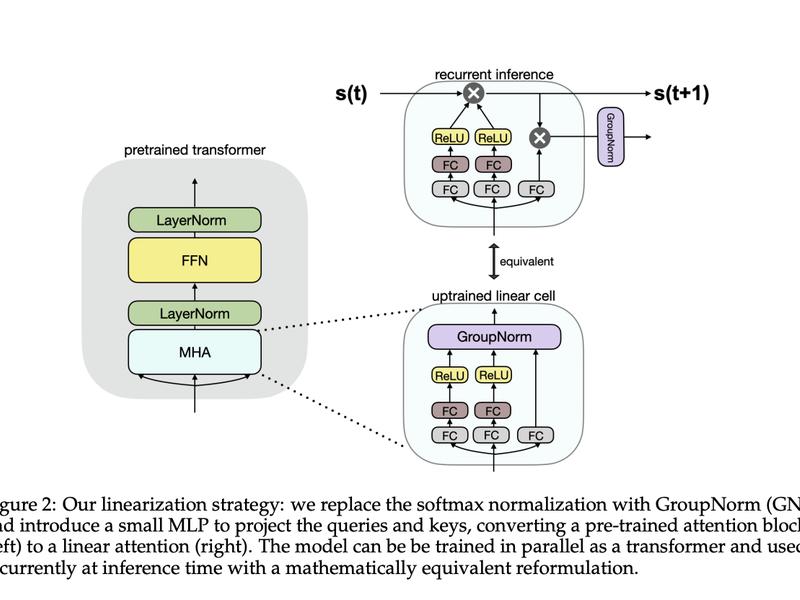

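Transformer models have driven recent advances in NLP, but their memory and compute requirements are high: the cost of self-attention grows quadratically with sequence length, and the key-value cache grows with every generated token. Researchers are exploring more efficient alternatives, including Linear Transformers and state-space models, as well as SUPRA, a method that converts pre-trained transformers into RNNs.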
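
To make the link between Linear Transformers and RNNs concrete, here is a minimal pure-Python sketch of causal linear attention computed two ways: once with explicit sums over all earlier positions, and once as a recurrence over a fixed-size state. The function names and the `elu(x) + 1` feature map are assumptions chosen for illustration from the linear-attention literature; this is not SUPRA's exact formulation, only the general idea that replacing softmax with a kernel feature map lets attention be evaluated like an RNN.

```python
import math


def feature_map(x):
    # elu(x) + 1: a positive feature map (an assumed choice for this sketch).
    return [v + 1.0 if v > 0 else math.exp(v) for v in x]


def causal_linear_attention_parallel(Q, K, V):
    """Reference form: each position sums over itself and all earlier ones."""
    d_v = len(V[0])
    out = []
    for t in range(len(Q)):
        q = feature_map(Q[t])
        # Unnormalized similarity between position t and every position s <= t.
        weights = [
            sum(a * b for a, b in zip(q, feature_map(K[s])))
            for s in range(t + 1)
        ]
        norm = sum(weights)
        out.append(
            [sum(w * V[s][j] for s, w in enumerate(weights)) / norm
             for j in range(d_v)]
        )
    return out


def causal_linear_attention_recurrent(Q, K, V):
    """RNN form: a constant-size state (S, z) is updated once per token."""
    d_k, d_v = len(K[0]), len(V[0])
    S = [[0.0] * d_v for _ in range(d_k)]  # running sum of phi(k) v^T
    z = [0.0] * d_k                        # running sum of phi(k)
    out = []
    for q_raw, k_raw, v in zip(Q, K, V):
        q, k = feature_map(q_raw), feature_map(k_raw)
        for i in range(d_k):
            z[i] += k[i]
            for j in range(d_v):
                S[i][j] += k[i] * v[j]
        norm = sum(q[i] * z[i] for i in range(d_k))
        out.append(
            [sum(q[i] * S[i][j] for i in range(d_k)) / norm
             for j in range(d_v)]
        )
    return out
```

Both functions return the same outputs up to floating-point rounding, but the recurrent form keeps only `S` and `z` between tokens, so its memory use does not grow with sequence length. That fixed-size state is what makes the RNN view attractive for inference.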