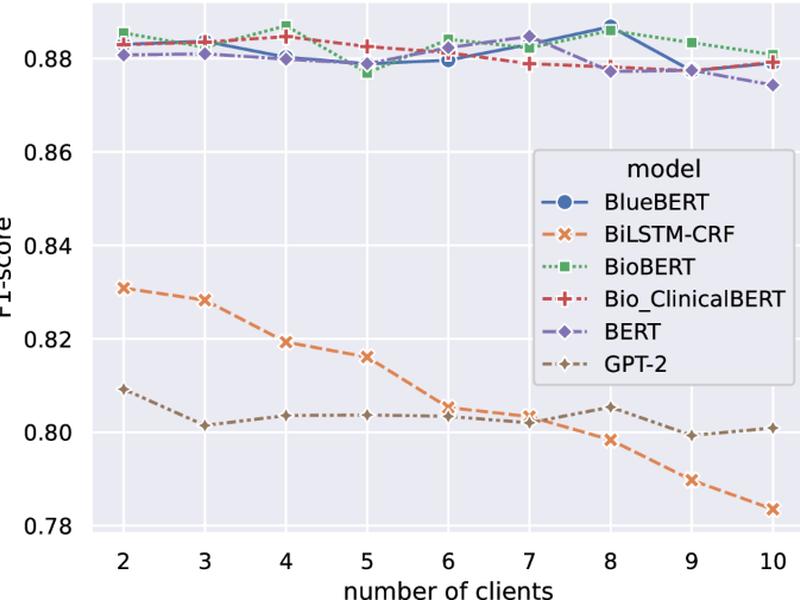

The article examines federated learning (FL) as a decentralized way to train language models (LMs) in the medical domain, where data access is restricted and privacy constraints are common. In FL, each participating client (for example, a hospital) trains on its own data and shares only model updates, so raw records never leave the institution. The study finds that FL models outperform models trained on any single client's data and, in some settings, perform comparably to models trained on the pooled data of all clients. FL models also significantly outperform pre-trained LMs used with few-shot prompting.
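The summary does not say which aggregation scheme the study uses. As an illustration only, the sketch below assumes the widely used FedAvg pattern: the server broadcasts global parameters, each client runs a few steps of gradient descent on its private data, and the server averages the returned parameters weighted by client dataset size. To stay short and self-contained, it uses a toy linear model `y ≈ w*x + b` in place of an LM. The functions `local_sgd` and `fedavg` and the client data are hypothetical.

```python
"""Minimal FedAvg sketch on a toy linear model (hypothetical setup, not the study's method)."""


def local_sgd(w, b, data, lr=0.1, epochs=20):
    """Full-batch gradient descent on one client's (x, y) pairs, minimizing mean squared error."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def fedavg(clients, rounds=50):
    """Each round: send global params to clients, train locally, average weighted by client size."""
    w, b = 0.0, 0.0
    total = sum(len(d) for d in clients)
    for _ in range(rounds):
        # Only parameters travel back to the server; the raw (x, y) pairs stay on each client.
        updates = [local_sgd(w, b, d) for d in clients]
        w = sum(len(d) * uw for d, (uw, _) in zip(clients, updates)) / total
        b = sum(len(d) * ub for d, (_, ub) in zip(clients, updates)) / total
    return w, b


# Two hypothetical clients whose private data follow y = 2x + 1.
clients = [
    [(x, 2 * x + 1) for x in (-1.0, 0.0, 1.0)],  # client A
    [(x, 2 * x + 1) for x in (-2.0, 2.0)],       # client B
]
w, b = fedavg(clients)
print(round(w, 3), round(b, 3))  # prints: 2.0 1.0
```

Weighting by dataset size makes the average favor clients with more examples. In a real LM setting the same averaging would be applied to every tensor of network weights rather than to two scalars.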