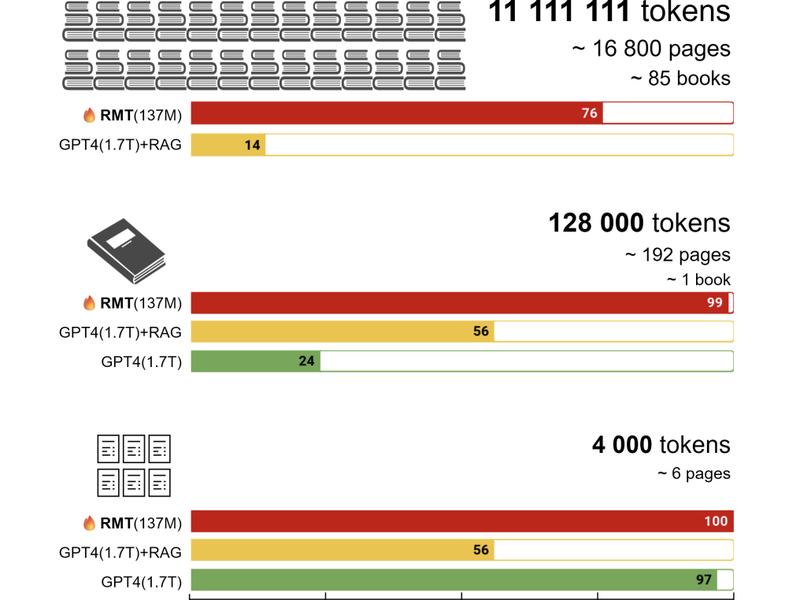

Recent research shows that recurrent memory is a viable way to extend the context window of transformers, and introduces BABILong, a framework for testing NLP models on long documents. BABILong builds on the bAbI reasoning benchmark and is designed to assess how well generative models handle lengthy contexts.
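To make the idea of a long-context reasoning test more concrete, here is a minimal sketch of how such a sample might be put together. It assumes the test hides a few bAbI-style facts inside a much longer stretch of unrelated text. The function name `build_long_context_sample`, the filler sentences, and the example facts are all hypothetical illustrations, not the framework's actual code or data.

```python
import random


def build_long_context_sample(facts, filler_sentences, total_sentences, seed=0):
    """Scatter `facts` (keeping their order) among randomly chosen filler sentences.

    Hypothetical helper for illustration only; not part of any real benchmark code.
    """
    if total_sentences < len(facts):
        raise ValueError("total_sentences must be at least the number of facts")

    rng = random.Random(seed)
    # Pick the slots that will hold facts; iterating slots in increasing
    # order below keeps the facts in their original sequence.
    fact_slots = set(rng.sample(range(total_sentences), len(facts)))

    fact_iter = iter(facts)
    sentences = []
    for slot in range(total_sentences):
        if slot in fact_slots:
            sentences.append(next(fact_iter))
        else:
            sentences.append(rng.choice(filler_sentences))
    return " ".join(sentences)


# Illustrative bAbI-style facts and distractor text (made up for this sketch).
facts = [
    "Mary went to the kitchen.",
    "Mary picked up the apple.",
    "Mary went to the garden.",
]
filler = [
    "The harbor was quiet that morning.",
    "Rain had fallen over the hills all night.",
    "A clock ticked somewhere in the old house.",
]
question = "Where is the apple?"
expected_answer = "garden"

context = build_long_context_sample(facts, filler, total_sentences=50)
prompt = f"{context}\n\nQuestion: {question}"
```

Raising `total_sentences` lengthens the document while the reasoning task stays the same, so any drop in accuracy can be attributed to context length rather than task difficulty.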
