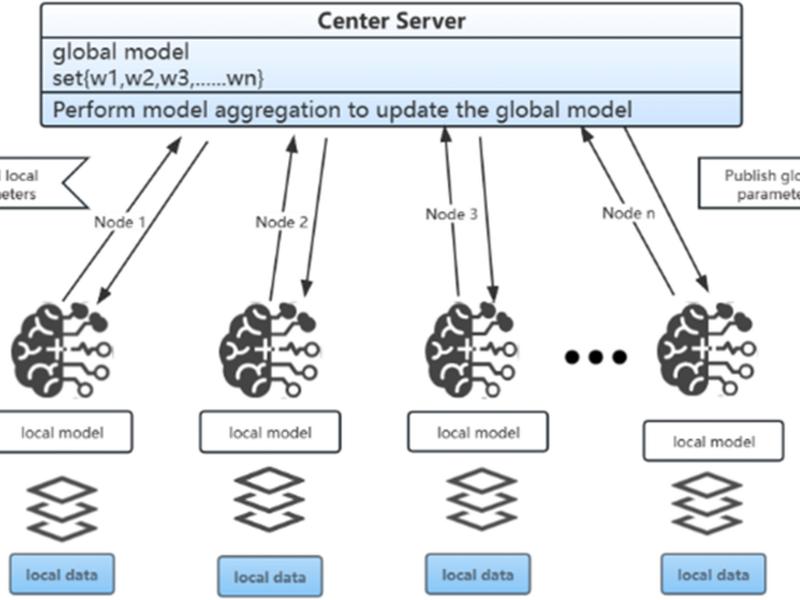

This paper proposes a two-layer accumulated quantized compression algorithm (TLAQC) to reduce the communication cost of federated learning. TLAQC introduces a revised quantization method, RQSGD, which applies zero-value correction to mitigate ineffective quantization and reduce the average quantization error. TLAQC also lowers the frequency of gradient uploads through an adaptive threshold and a parameter self-inspection mechanism, which further reduces communication cost. Experimental results show that RQSGD lowers the incidence of ineffective quantization to as little as 0.003% and reduces the average quantization error to 1.6 × . Compared to full-precision FedAVG, TLAQC compresses upload traffic to only 6.73% of the full-precision volume while increasing accuracy by 1.25%.
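To make the two ideas in the abstract concrete, the following is a minimal sketch under stated assumptions and not the paper's method. It implements the standard QSGD-style stochastic quantizer that RQSGD revises, without the zero-value correction, whose details the abstract does not give. It treats a quantized gradient that is entirely zero as "ineffective quantization", which is an assumption about the term. It also uses a fixed norm threshold as a stand-in for the adaptive upload rule. The names `qsgd_quantize`, `is_ineffective`, and `should_upload` are hypothetical.

```python
import numpy as np


def qsgd_quantize(v, levels, rng):
    """Standard QSGD-style stochastic quantization (NOT the paper's RQSGD).

    Each |v_i| / ||v|| is rounded up or down to a multiple of 1/levels,
    with the round-up probability chosen so the result is unbiased.
    """
    norm = np.linalg.norm(v)
    if norm == 0.0:
        return np.zeros_like(v)
    scaled = np.abs(v) / norm * levels        # values in [0, levels]
    lower = np.floor(scaled)
    prob_up = scaled - lower                  # probability of rounding up
    rounded = lower + (rng.random(v.shape) < prob_up)
    return norm * np.sign(v) * rounded / levels


def is_ineffective(q):
    """Assumption: 'ineffective' means every component quantized to zero."""
    return not np.any(q)


def should_upload(accumulated, threshold):
    """Assumption: upload only when the accumulated gradient is large enough.

    A fixed threshold is used here; the paper's threshold is adaptive.
    """
    return bool(np.linalg.norm(accumulated) >= threshold)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    grad = rng.normal(size=1000)
    q = qsgd_quantize(grad, levels=4, rng=rng)
    print("mean abs quantization error:", np.mean(np.abs(q - grad)))
    print("ineffective:", is_ineffective(q))
    print("upload:", should_upload(q, threshold=1.0))
```

With few quantization levels and a high-dimensional gradient, most components round to zero in this baseline scheme. That is the kind of behavior a zero-value correction would plausibly target.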