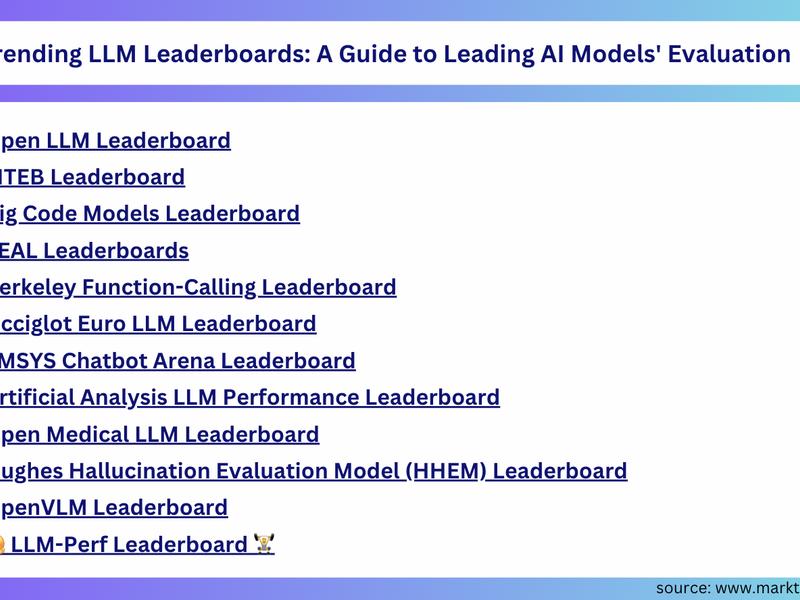

The article discusses three leaderboards that evaluate AI models on different kinds of tasks. The Open LLM Leaderboard benchmarks models across six tasks, the MTEB Leaderboard evaluates text embeddings on eight tasks and 58 datasets, and the Big Code Models Leaderboard compares multilingual code generation models on two benchmarks. Results across these leaderboards suggest that no single method excels on all tasks, which points to the need for further development in the field.
