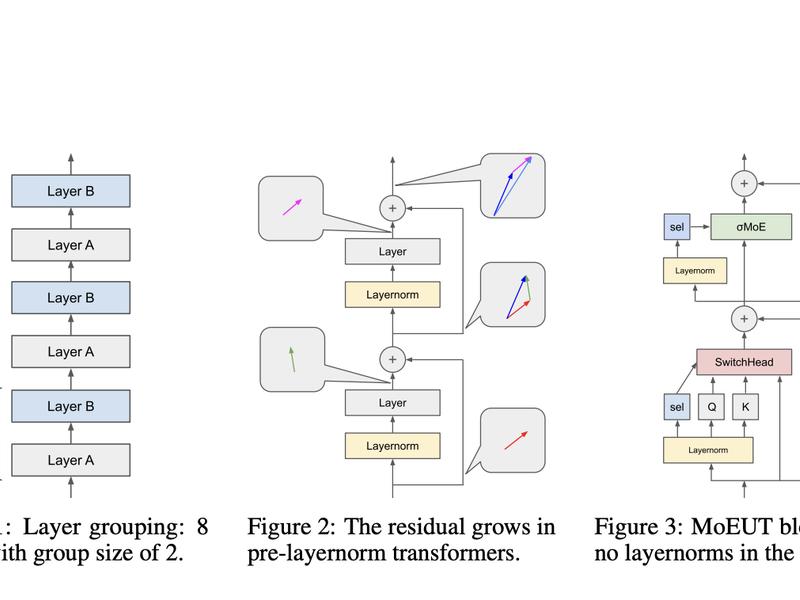

Researchers have proposed Mixture-of-Experts Universal Transformers (MoEUTs), a new architecture that addresses the efficiency problems of Universal Transformers (UTs). UTs share parameters across layers, which leaves them short on parameters for parameter-heavy tasks such as language modeling. MoEUTs combine a mixture-of-experts approach with two further innovations, layer grouping and peri-layernorm, to close that gap. According to the authors, the architecture outperforms standard Transformers on various datasets while using fewer resources.
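To make the layer grouping idea more concrete, here is a minimal Python sketch. It assumes that layer grouping means a small group of layers with distinct weights is applied repeatedly in depth, so the network is deeper than its number of unique weight sets. The names `layer_schedule`, `apply_grouped`, and the toy layers are hypothetical illustrations, not the authors' code, and the mixture-of-experts and peri-layernorm parts are left out.

```python
from typing import Callable, List


def layer_schedule(group_size: int, n_repeats: int) -> List[int]:
    """For each depth position, return the index of the weight set it reuses.

    Assumption: a group of `group_size` distinct layers is repeated
    `n_repeats` times, so position `d` uses weight set `d % group_size`.
    """
    return [depth % group_size for depth in range(group_size * n_repeats)]


def apply_grouped(x: float, layers: List[Callable[[float], float]], n_repeats: int) -> float:
    """Apply the same group of layers `n_repeats` times in sequence."""
    for _ in range(n_repeats):
        for layer in layers:
            x = layer(x)
    return x


# Toy stand-ins for real Transformer layers (hypothetical, for illustration only).
toy_layers = [lambda x: x + 1, lambda x: x * 2]

schedule = layer_schedule(group_size=2, n_repeats=3)
result = apply_grouped(1, toy_layers, n_repeats=3)

print(schedule)  # [0, 1, 0, 1, 0, 1]
print(result)    # 22
```

In this sketch the effective depth is six layers, but only two sets of weights exist. A group size of one would correspond to a plain UT that repeats a single shared layer.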