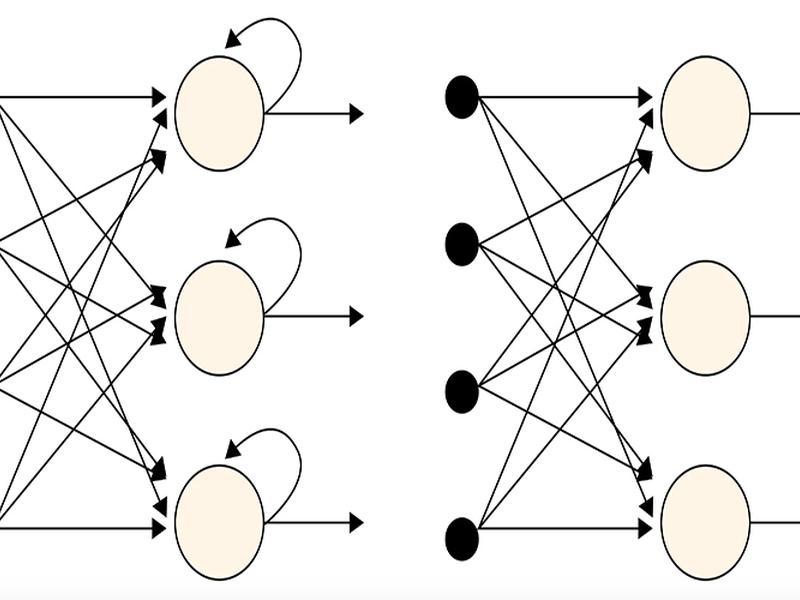

This article discusses the differences between recurrent neural networks (RNNs) and feed-forward neural networks (FFNNs), and explains how RNNs are trained with backpropagation through time (BPTT) and how long short-term memory (LSTM) units help them retain information across time steps and make predictions. It also covers the types of weights used in RNNs and applications of RNNs in deep learning tasks such as natural language processing and financial prediction.