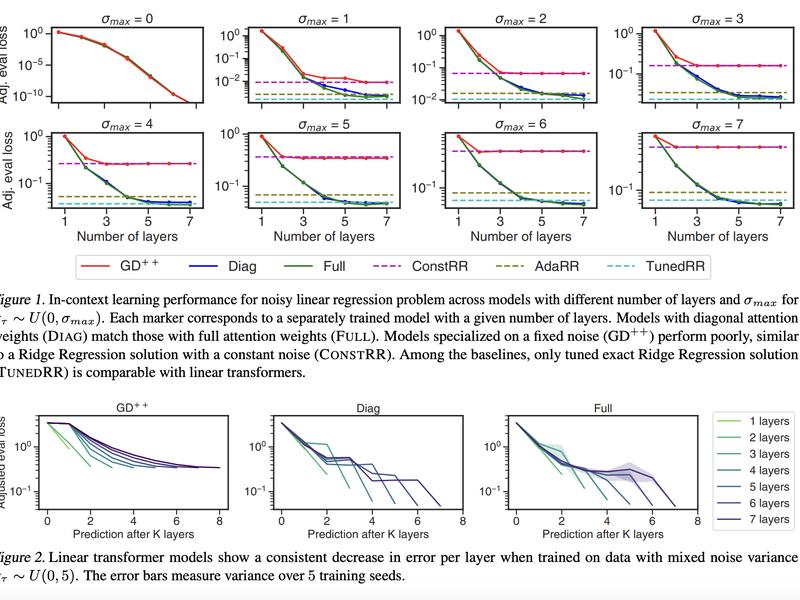

Researchers from Google Research and Duke University have studied linear transformers to address the challenges of learning from noisy and complex data. Linear transformers are models built from linear (softmax-free) self-attention layers. The researchers show that these models can implicitly perform gradient-based optimization during the forward pass at inference time, which makes them unusually versatile in-context learners.
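To make "gradient-based optimization during the forward pass" concrete, here is a minimal sketch of a known construction from earlier work on in-context learning, not necessarily the exact mechanism in this research. With hand-picked weights, one linear self-attention layer reproduces the prediction of one gradient-descent step on an in-context linear regression task. The task assumes tokens `(x_i, y_i)`, a query token `(x_q, 0)`, and a start from `w = 0`. All function names, weights, and data below are hypothetical and chosen for illustration.

```python
def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def linear_self_attention(tokens, query, w_k, w_q, w_v):
    """Update the query token with one linear (softmax-free) attention layer."""
    q = matvec(w_q, query)
    n = len(tokens)
    out = list(query)  # residual connection
    for z in tokens:
        score = dot(matvec(w_k, z), q)  # raw bilinear score, no softmax
        v = matvec(w_v, z)
        out = [o + score * vi / n for o, vi in zip(out, v)]
    return out


def gd_one_step_prediction(xs, ys, x_q, eta):
    """Prediction after one GD step from w=0 on L(w) = 1/(2n) * sum (w.x - y)^2."""
    n, d = len(xs), len(x_q)
    grad = [-sum(y * x[j] for x, y in zip(xs, ys)) / n for j in range(d)]
    w = [-eta * g for g in grad]
    return dot(w, x_q)


# Hypothetical in-context data: 2-D inputs, labels from w_true = [2, -1].
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
ys = [2.0, -1.0, 1.0]
x_q = [1.0, 2.0]
eta = 0.5

# Hand-picked weights (assumption): keys/queries read the x part,
# values write eta * y into the label slot of the query token.
w_k = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
w_q = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
w_v = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, eta]]

tokens = [x + [y] for x, y in zip(xs, ys)]
query = x_q + [0.0]

lsa_prediction = linear_self_attention(tokens, query, w_k, w_q, w_v)[2]
gd_prediction = gd_one_step_prediction(xs, ys, x_q, eta)
print(lsa_prediction, gd_prediction)  # both equal 0.5
```

The two numbers agree because, with these weights, the attention output in the label slot is `(eta / n) * sum_i y_i * (x_i . x_q)`. That is exactly `w_1 . x_q` after one gradient step from zero. Stacking such layers with learned rather than hand-picked weights is what lets a linear transformer run richer, optimizer-like procedures in its forward pass.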