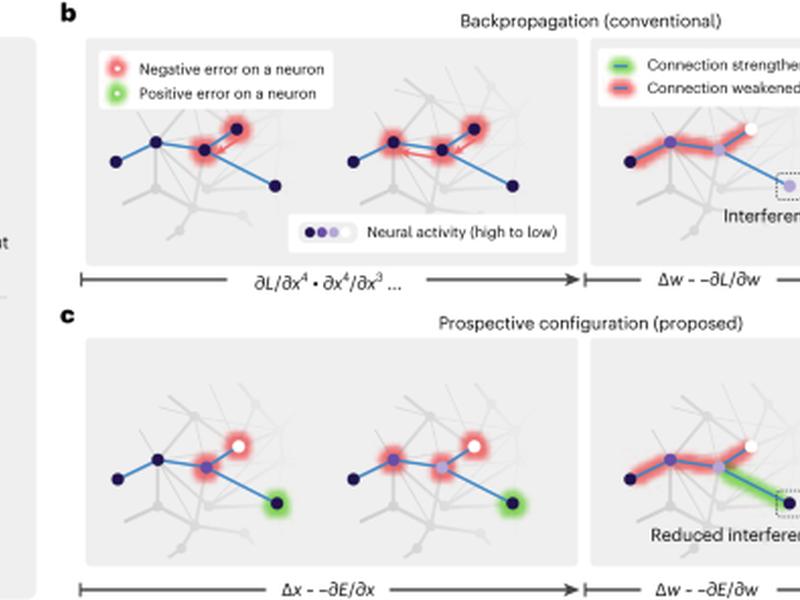

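This article contrasts backpropagation and prospective configuration, two ways of changing a neural network so that it better predicts outcomes. Backpropagation adjusts the network's weights directly to reduce the error at the output. Prospective configuration first infers the pattern of neural activity the network should have after learning, one that is consistent with the observed outcome, and then adjusts the weights to consolidate that activity.

The article illustrates the difference with a bear that sees a river and expects both to hear water and to smell salmon, but on this occasion only smells the salmon. Backpropagation would adjust the weights between the visual and auditory neurons to reduce the error in the sound prediction. Because the same visual pathway also drives the smell prediction, this can weaken the bear's expectation of smelling salmon as well. Prospective configuration would first settle the network's activity into a state that matches what was observed (salmon, but no sound of water) and then change the weights to fit that state, so the salmon prediction is largely preserved.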
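The contrast can be made concrete with a deliberately tiny linear network. This is a toy sketch under stated assumptions, not the method from the underlying research: the one-hidden-unit architecture, the initial weights, the learning rate, and the helper names (`predict`, `backprop_step`, `prospective_step`) are all hypothetical. Prospective configuration is approximated here with a predictive-coding energy, one kind of energy-based model in which hidden activity is relaxed before the weights change. The `interference` figure is also an illustrative metric chosen for this sketch: how much the salmon prediction drops per unit drop in the water-sound prediction.

```python
"""Toy contrast of backpropagation and prospective configuration.

Hypothetical setup (not taken from the article): one visual input ("river"),
one linear hidden unit, and two linear outputs ("water sound", "salmon smell").
Prospective configuration is sketched with a predictive-coding energy:
hidden activity is relaxed first, then weights are updated to match it.
"""

LR = 0.1  # learning rate shared by both rules (assumed value)


def predict(w_in, w_sound, w_smell, x=1.0):
    """Feedforward pass returning the (sound, smell) predictions."""
    h = w_in * x
    return w_sound * h, w_smell * h


def backprop_step(w_in, w_sound, w_smell, target, x=1.0):
    """One gradient step on 0.5 * squared output error."""
    h = w_in * x
    err_sound = w_sound * h - target[0]
    err_smell = w_smell * h - target[1]
    # The hidden unit receives error from BOTH outputs, so fixing the sound
    # prediction also changes w_in, which feeds the smell prediction.
    err_h = w_sound * err_sound + w_smell * err_smell
    return (
        w_in - LR * err_h * x,
        w_sound - LR * err_sound * h,
        w_smell - LR * err_smell * h,
    )


def prospective_step(w_in, w_sound, w_smell, target, x=1.0,
                     steps=200, rate=0.1):
    """Infer hidden activity with outputs clamped to the target, then learn.

    Energy: E = 0.5*(h - w_in*x)**2 + 0.5*sum((t - w*h)**2 over outputs).
    `rate` must be small enough for the relaxation to converge.
    """
    def errors(h):
        return (h - w_in * x,             # hidden prediction error
                target[0] - w_sound * h,  # sound prediction error
                target[1] - w_smell * h)  # smell prediction error

    h = w_in * x  # start from the feedforward activity
    for _ in range(steps):
        eps_h, eps_sound, eps_smell = errors(h)
        h -= rate * (eps_h - w_sound * eps_sound - w_smell * eps_smell)  # dE/dh

    # Local weight updates (-dE/dw) that consolidate the relaxed activity.
    eps_h, eps_sound, eps_smell = errors(h)
    return (
        w_in + LR * eps_h * x,
        w_sound + LR * eps_sound * h,
        w_smell + LR * eps_smell * h,
    )


weights = (1.0, 1.0, 1.0)  # river->hidden, hidden->sound, hidden->smell
target = (0.0, 1.0)        # no water sound heard, salmon smelled

before = predict(*weights)
bp = predict(*backprop_step(*weights, target))
pc = predict(*prospective_step(*weights, target))

for name, (sound, smell) in [("backprop", bp), ("prospective", pc)]:
    # Drop in the smell prediction per unit drop in the sound prediction.
    interference = (before[1] - smell) / (before[0] - sound)
    print(f"{name}: sound={sound:.3f} smell={smell:.3f} "
          f"interference={interference:.2f}")
# backprop: sound=0.810 smell=0.900 interference=0.53
# prospective: sound=0.924 smell=0.988 interference=0.16
```

Both rules lower the sound prediction, but in this toy run backpropagation gives up considerably more of the smell prediction per unit of sound learning. The two rules take steps of different sizes, so the ratio rather than the raw predictions is the quantity worth comparing; other learning rates or initial weights would change the exact numbers.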