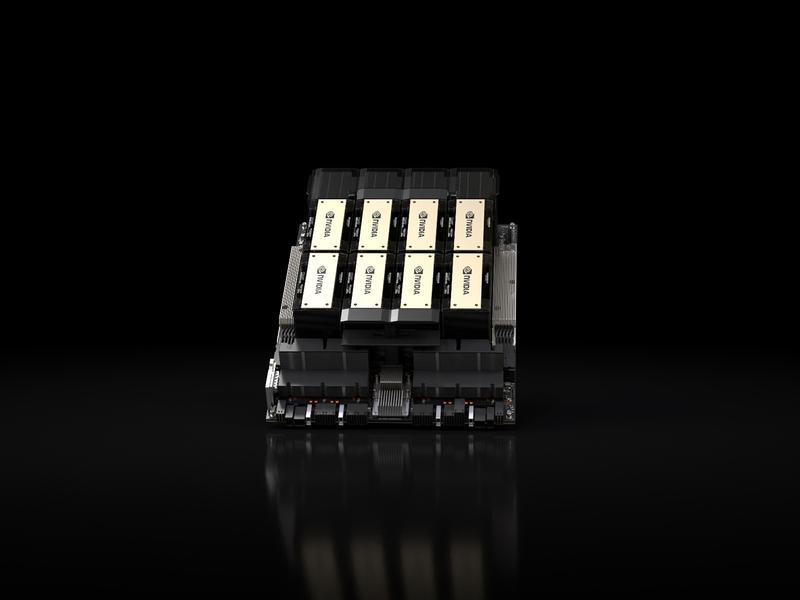

Nvidia Corp. has announced the HGX H200 computing platform, a system built around the upcoming H200 Tensor Core graphics processing unit. The GPU is based on the company's Hopper architecture, with advanced memory to handle the massive amounts of data needed for artificial intelligence and supercomputing workloads. The H200 includes the Transformer Engine, a feature designed to speed up natural language processing models, and has 141 gigabytes of memory with 4.8 terabytes per second of bandwidth. According to Nvidia, the H200 delivers 1.6 times the inference performance of the H100 on the 175 billion-parameter GPT-3 model and 1.9 times the inference performance of the H100 on the 70 billion-parameter Llama 2 model.