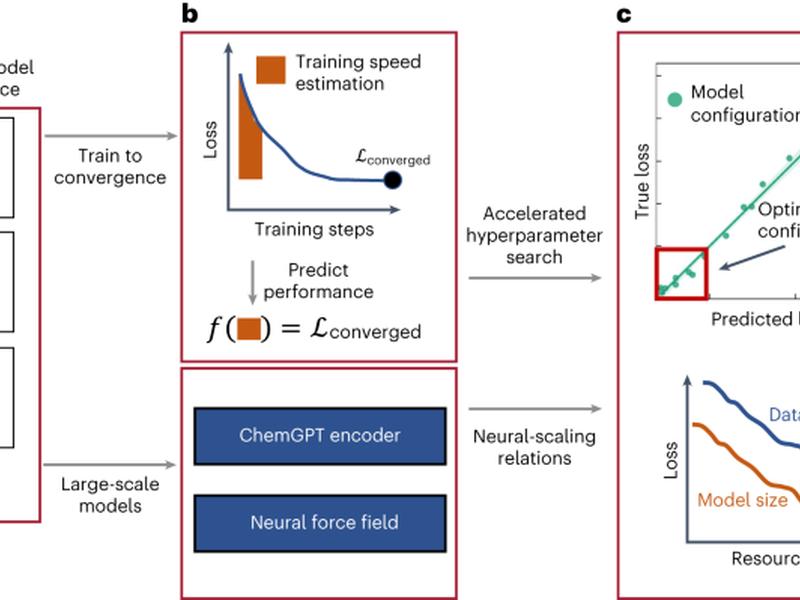

This article discusses strategies for scaling deep chemical models and investigates neural-scaling behaviour in large language models (LLMs) and graph neural networks (GNNs) for generative chemical modelling and machine-learned interatomic potentials. It introduces ChemGPT, a generative pre-trained transformer for autoregressive language modelling of small molecules, and examines large invariant and equivariant GNNs trained on molecular dynamics trajectories. It also explores techniques for accelerating neural architecture search to reduce the total time and compute required. Finally, it identifies trends in how chemical models scale with model capacity and dataset size.
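
Neural-scaling trends of this kind are commonly summarised as a power law, where loss falls roughly as `a * size**(-alpha)` as model capacity or dataset size grows. The sketch below assumes that functional form and estimates the exponent with a straight-line fit in log-log space. The function name `fit_power_law` and all numbers are hypothetical and synthetic; they are not results from the article.

```python
import numpy as np


def fit_power_law(sizes, losses):
    """Fit loss ~ a * size**(-alpha) by least squares in log-log space.

    Returns (a, alpha). Assumes all sizes and losses are strictly positive.
    """
    log_size = np.log(np.asarray(sizes, dtype=float))
    log_loss = np.log(np.asarray(losses, dtype=float))
    # In log space the power law is a line: log(loss) = log(a) - alpha * log(size)
    slope, intercept = np.polyfit(log_size, log_loss, deg=1)
    return float(np.exp(intercept)), float(-slope)


# Synthetic, illustrative values only (NOT measurements from the article):
# a noiseless curve generated with a = 5.0 and alpha = 0.2.
sizes = np.array([1e5, 1e6, 1e7, 1e8])
losses = 5.0 * sizes ** -0.2

a, alpha = fit_power_law(sizes, losses)
print(f"a = {a:.3f}, alpha = {alpha:.3f}")
```

In practice the same fit would be run separately for each axis of interest (for example, loss against parameter count at fixed dataset size), and real measurements would scatter around the fitted line rather than sit on it.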