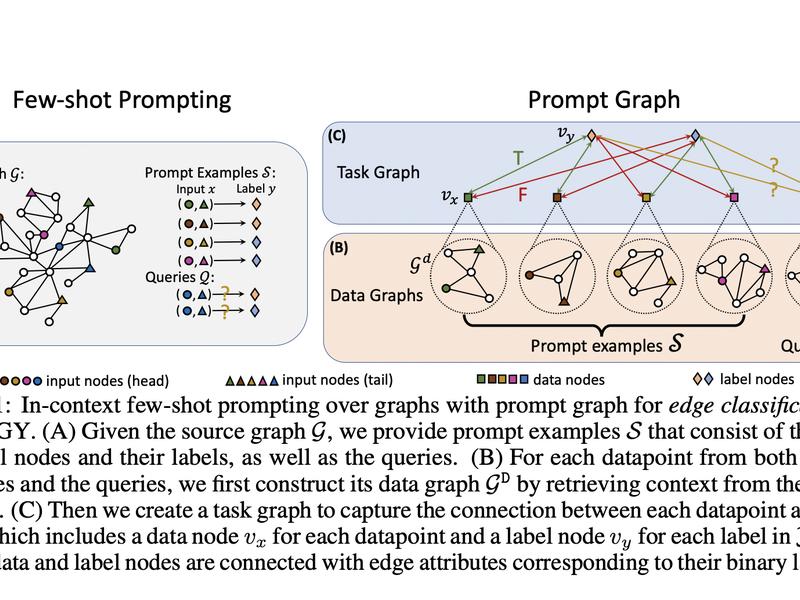

Researchers from Stanford have recently proposed PRODIGY, a pretraining framework that enables in-context learning over graphs. PRODIGY formulates in-context learning over graphs with a prompt graph representation that unifies node-level, edge-level, and graph-level machine learning tasks. Because every task is expressed in this one connected representation, diverse graph machine learning tasks can be specified to the same model, irrespective of the size of the graph.
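To make the idea of a prompt graph more concrete, here is a minimal sketch in plain Python. It is an illustration only, not PRODIGY's implementation: the `PromptGraph` class, its field names, and the example identifiers are all hypothetical. It assumes that each data item (a node, an edge, or a whole graph) is linked to every candidate label, that prompt examples mark which link is the true label, and that queries leave that unmarked for the model to predict.

```python
from dataclasses import dataclass, field


@dataclass
class PromptGraph:
    """Toy prompt graph (hypothetical structure, for illustration only)."""
    labels: list                                        # candidate label names
    data_kinds: dict = field(default_factory=dict)      # item id -> "node" | "edge" | "graph"
    example_edges: list = field(default_factory=list)   # (item id, label, is_true_label)
    query_edges: list = field(default_factory=list)     # (item id, label), truth unknown

    def add_example(self, item_id, kind, true_label):
        """Link a labeled prompt example to every label, flagging the true one."""
        if true_label not in self.labels:
            raise ValueError(f"unknown label: {true_label}")
        self.data_kinds[item_id] = kind
        for label in self.labels:
            self.example_edges.append((item_id, label, label == true_label))

    def add_query(self, item_id, kind):
        """Link an unlabeled query to every label; the true link is to be predicted."""
        self.data_kinds[item_id] = kind
        for label in self.labels:
            self.query_edges.append((item_id, label))


# A node-level task with two classes, two prompt examples, and one query.
pg = PromptGraph(labels=["class_a", "class_b"])
pg.add_example("node_17", "node", "class_a")
pg.add_example("node_42", "node", "class_b")
pg.add_query("node_99", "node")
print(len(pg.example_edges), len(pg.query_edges))  # prints "4 2"
```

Because the structure only records data items and their links to labels, the same sketch covers an edge-level or graph-level task by passing `"edge"` or `"graph"` as the `kind`, which is the sense in which one representation can specify different task types to the same model.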