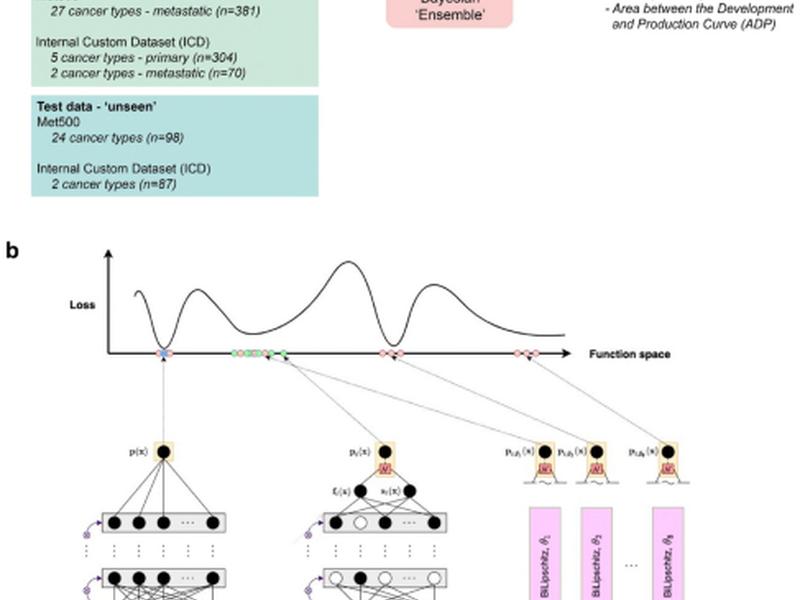

This article discusses a study on quantifying and improving robustness to shift-induced overconfidence in oncology, using simple and accessible deep learning (DL) methods. The study used three independent datasets and four presumed hidden variables as proxies for different distribution shifts. It also introduced a new metric, the ADP, designed to capture shift-induced overconfidence; the metric is directly interpretable as a proxy for the expected accuracy loss when a DL model moves from development to production.