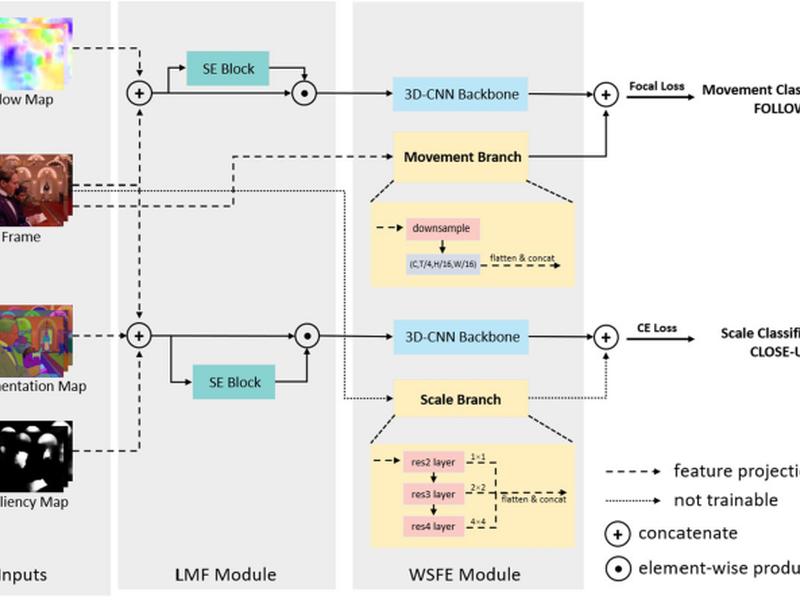

This paper addresses shot type classification in films and argues that multimodal video inputs improve classification accuracy. To this end, the authors propose the Lightweight Weak Semantic Relevance Framework (LWSRNet) for classifying cinematographic shot types. The framework is evaluated on FullShots, a large film shot dataset containing 27K shots from 19 movies, and outperforms previous methods in accuracy while using fewer parameters and less computation.