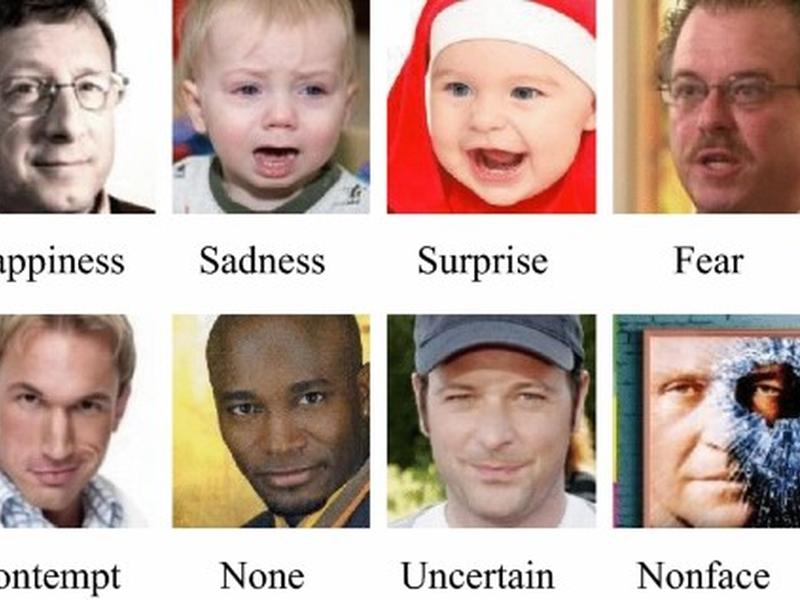

This study examines the application of deep neural networks (DNNs) to facial emotion recognition (FER). A network combining squeeze-and-excitation (SE) modules with a residual neural network (ResNet) was used for the FER task. AffectNet and the Real-World Affective Faces Database (RAF-DB) served as the facial expression databases for training and evaluating this convolutional neural network (CNN). Feature maps were extracted from the residual blocks for further analysis. The results show that the features around the nose and mouth are critical facial landmarks for the network. Cross-database validation was conducted between the two databases: the model trained on AffectNet achieved 77.37% accuracy when validated on RAF-DB.
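The abstract does not say exactly how the SE and residual components are combined. One common arrangement, taken from the original SE-Net design, places an SE module on the residual branch before the identity addition: $y = \mathrm{ReLU}(x + \mathrm{SE}(F(x)))$. The pure-Python sketch below illustrates only that arrangement and is not the authors' implementation. It assumes that a feature map is a channels × height × width nested list, that `residual_fn` is a hypothetical stand-in for the convolutional layers of the residual branch, and that the excitation weights `w1` and `w2` are illustrative values with biases omitted.

```python
import math
from typing import Callable, List

# Assumed layout: a feature map is a list of channels, each a height x width grid.
FeatureMap = List[List[List[float]]]
Matrix = List[List[float]]


def squeeze(x: FeatureMap) -> List[float]:
    """Global average pooling: one scalar descriptor per channel."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]


def excite(z: List[float], w1: Matrix, w2: Matrix) -> List[float]:
    """Bottleneck FC -> ReLU -> FC -> sigmoid, giving one weight in (0, 1) per channel.

    w1 has shape (C/r) x C and w2 has shape C x (C/r); biases are omitted (assumption).
    """
    hidden = [max(0.0, sum(w * v for w, v in zip(row, z))) for row in w1]
    return [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden)))) for row in w2]


def scale(x: FeatureMap, s: List[float]) -> FeatureMap:
    """Recalibrate: multiply every value in channel c by its excitation weight s[c]."""
    return [[[v * sc for v in row] for row in ch] for ch, sc in zip(x, s)]


def se_residual_block(
    x: FeatureMap,
    residual_fn: Callable[[FeatureMap], FeatureMap],  # hypothetical residual branch F
    w1: Matrix,
    w2: Matrix,
) -> FeatureMap:
    """Compute ReLU(x + SE(F(x))) with an identity shortcut."""
    u = residual_fn(x)
    u_tilde = scale(u, excite(squeeze(u), w1, w2))
    # Element-wise identity addition followed by ReLU.
    return [
        [[max(0.0, a + b) for a, b in zip(rx, ru)] for rx, ru in zip(cx, cu)]
        for cx, cu in zip(x, u_tilde)
    ]


# Usage: two 2x2 channels, an identity residual branch, and reduction ratio r = 2.
x = [[[1.0, 2.0], [3.0, 4.0]], [[-1.0, 0.0], [1.0, 2.0]]]
y = se_residual_block(x, lambda t: t, w1=[[0.5, 0.5]], w2=[[0.0], [0.0]])
```

With `w2` set to zeros, every channel weight is sigmoid(0) = 0.5, so the block returns ReLU(1.5·x). With trained weights, the excitation step instead learns to emphasize informative channels. This per-channel recalibration is the property that motivates pairing SE modules with residual blocks.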