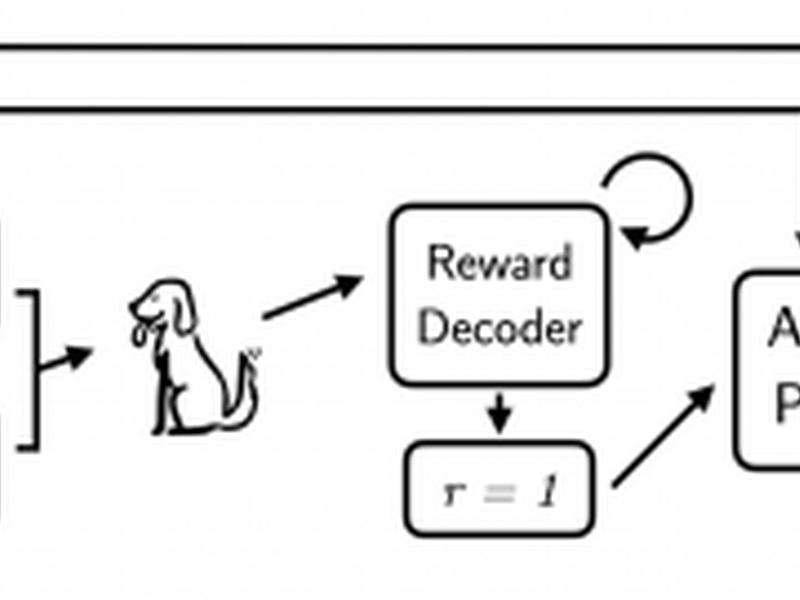

Interaction-Grounded Learning (IGL) is a new paradigm in which an agent infers a reward function from arbitrary feedback signals rather than from explicit numeric rewards. Rewards play a crucial role in reinforcement learning (RL), and they can be learned either through Reinforcement Learning from Human Feedback (RLHF) or through IGL. The idea is demonstrated here with a toy problem: a new dog, Lassie, and the agent’s attempts to make her happy and comfortable. IGL needs two conditions to succeed: rare rewards and consistent communication.
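To make the two criteria concrete, here is a minimal Python sketch. It is a toy illustration, not the IGL algorithm itself, and it rests on assumptions that are not in the text above: "rare rewards" is read as "a random policy seldom earns a reward", "consistent communication" as "the same latent reward always produces the same signal", and the signals `"wag"` and `"growl"`, the single good action, and all function names are hypothetical.

```python
import random
from collections import Counter


def simulate_feedback(n_steps, n_actions=5, good_action=0, seed=0):
    """Hypothetical toy environment: a uniformly random policy picks actions,
    and the dog answers 'wag' only for the one good action."""
    rng = random.Random(seed)
    log = []
    for _ in range(n_steps):
        action = rng.randrange(n_actions)
        # Consistent communication (assumed): same latent reward, same signal.
        signal = "wag" if action == good_action else "growl"
        log.append((action, signal))
    return log


def decode_reward(log):
    """Label the rarer signal as reward 1, relying on the rare-rewards assumption."""
    counts = Counter(signal for _, signal in log)
    rare_signal = min(counts, key=counts.get)
    return {signal: int(signal == rare_signal) for signal in counts}


def best_action(log, reward_of):
    """Return the action with the highest average decoded reward."""
    totals = Counter()
    plays = Counter()
    for action, signal in log:
        totals[action] += reward_of[signal]
        plays[action] += 1
    return max(plays, key=lambda a: totals[a] / plays[a])


log = simulate_feedback(n_steps=1000)
reward_of = decode_reward(log)
print(reward_of, best_action(log, reward_of))
```

The agent never sees a numeric reward. It only observes which signal is uncommon under random behavior and treats that signal as the reward. If either assumption fails, for example if rewards are frequent or the dog's signals are inconsistent, this decoding rule would mislabel the feedback.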