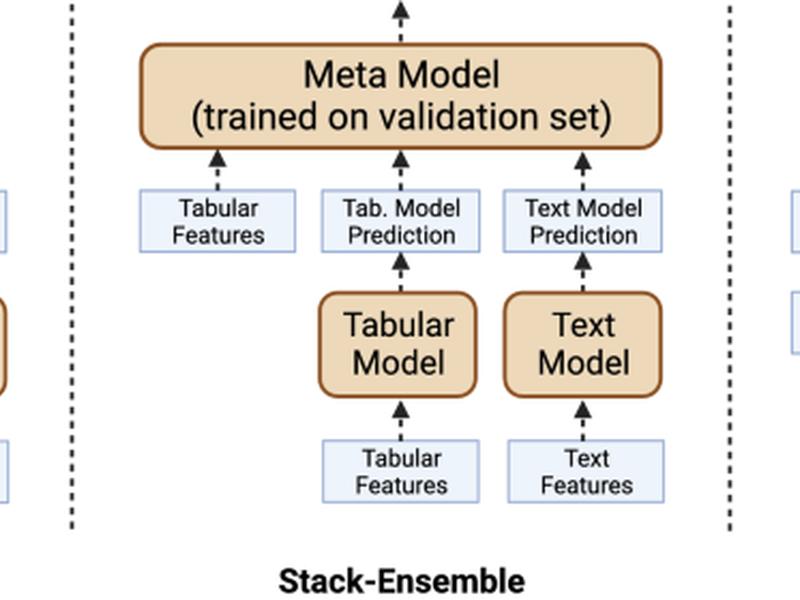

This article addresses the need for explainability in machine learning models used for clinical decision-making. The authors present a multimodal masking framework that extends the SHapley Additive exPlanations (SHAP) method to datasets combining text and tabular features, and apply it to identifying risk factors for companion animal mortality in veterinary electronic health records. The framework treats each modality consistently, which allows for more accurate and comprehensive explanations of model decisions. The authors also compare several multimodal approaches; the best-performing one uses PetBERT, a language model pre-trained on a veterinary dataset. The investigation shows that free-text narratives are important for predicting animal mortality and highlights the potential benefits of additional pre-training for language models.
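To make the idea of masking both modalities consistently more concrete, the following is a minimal sketch and not the authors' implementation. It computes exact Shapley values by enumerating feature subsets, whereas SHAP in practice relies on approximations. The record layout, the `[MASK]` placeholder, the field names `age_years` and `weight_kg`, and `toy_risk_model` are all hypothetical and chosen only for illustration.

```python
from itertools import combinations
from math import factorial

MASK_TOKEN = "[MASK]"  # assumed placeholder for a hidden text token


def mask_record(record, keep):
    """Return a copy of the record with every feature not in `keep` masked.

    Text tokens and tabular fields go through the same keep/mask rule,
    so both modalities are treated alike.
    """
    tokens = [
        tok if ("text", i) in keep else MASK_TOKEN
        for i, tok in enumerate(record["tokens"])
    ]
    tabular = {
        name: (value if ("tab", name) in keep else None)
        for name, value in record["tabular"].items()
    }
    return {"tokens": tokens, "tabular": tabular}


def toy_risk_model(record):
    """Hypothetical stand-in for a trained mortality classifier."""
    score = 0.0
    if "lethargic" in record["tokens"]:
        score += 0.5
    age = record["tabular"].get("age_years")
    if age is not None and age > 10:
        score += 0.25
    return score


def shapley_values(model, record):
    """Exact Shapley values over the union of text and tabular features."""
    features = [("text", i) for i in range(len(record["tokens"]))]
    features += [("tab", name) for name in record["tabular"]]
    n = len(features)
    values = {}
    for feature in features:
        others = [f for f in features if f != feature]
        total = 0.0
        for size in range(n):
            # Standard Shapley weight for a coalition of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                keep = set(subset)
                without = model(mask_record(record, keep))
                with_feature = model(mask_record(record, keep | {feature}))
                total += weight * (with_feature - without)
        values[feature] = total
    return values


record = {
    "tokens": ["dog", "lethargic"],
    "tabular": {"age_years": 12, "weight_kg": 20},
}
phi = shapley_values(toy_risk_model, record)
for feature, value in phi.items():
    print(feature, round(value, 3))
```

Because every feature, whether a token or a tabular field, is scored by the same procedure, the attributions are directly comparable across modalities, and they sum to the difference between the prediction on the full record and the prediction on the fully masked record. Exact enumeration grows exponentially with the number of features, so it is only practical for toy inputs like this one.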