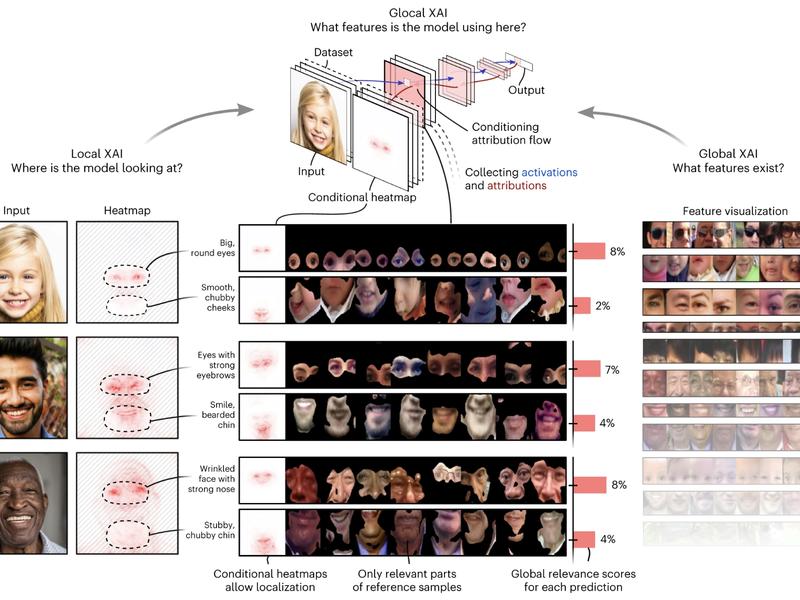

Machine learning and artificial intelligence are advancing rapidly, yet it remains difficult to understand how AI systems reach their decisions, because deep neural networks (DNNs) are intricate and highly nonlinear. To address this issue, Prof. Thomas Wiegand, Prof. Wojciech Samek, and Dr. Sebastian Lapuschkin introduced Concept Relevance Propagation (CRP). The method provides a pathway from attribution maps to human-understandable explanations, so that an individual AI decision can be explained through concepts people recognize. CRP integrates local and global perspectives, addressing both the ‘where’ question (which parts of the input are relevant) and the ‘what’ question (which learned concepts the model relies on) for an individual prediction.