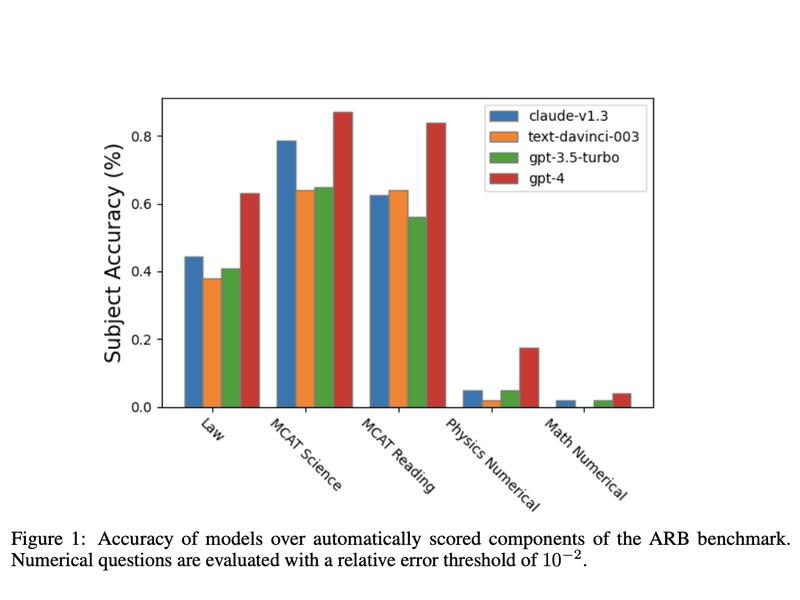

Natural Language Processing has advanced significantly in recent years, with the development of sophisticated language models such as GPT-3.5, GPT-4, BERT, and PaLM. To evaluate this progress, researchers have created a number of benchmarks, such as GLUE and SuperGLUE. However, leading models now score so highly on these benchmarks that they are no longer challenging enough to assess the models’ capabilities. To address this, a team of researchers has proposed a new benchmark called ARB (Advanced Reasoning Benchmark), which focuses on complex reasoning problems across subject areas such as mathematics, physics, biology, chemistry, and law. The team evaluated GPT-4 and Claude on ARB, and the results show that both models are still far from being able to solve the complex problems the benchmark poses.