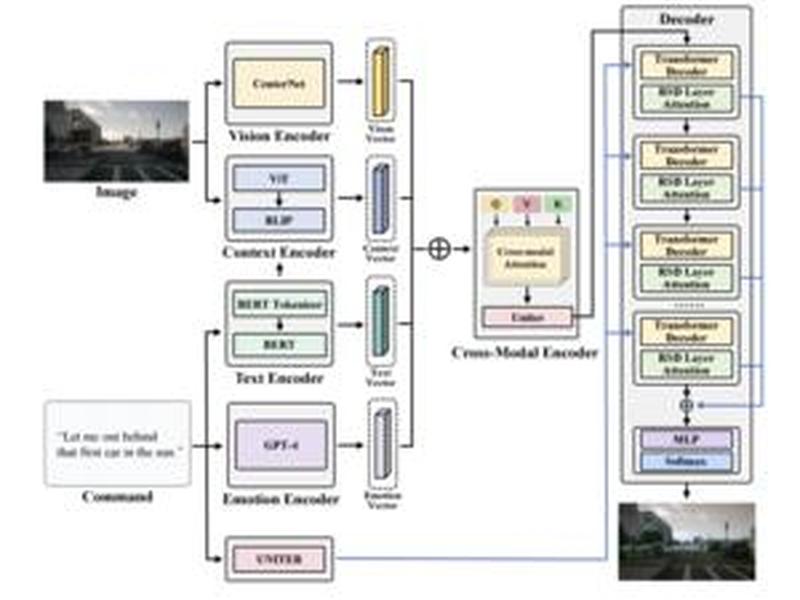

A team of researchers from the University of Macau has developed a Context-Aware Visual Grounding Model (CAVG) that integrates natural language processing with large language models to enable voice commands for autonomous vehicles. The model responds to the public’s reluctance to hand full control to AI systems, and it tackles the challenge of linking verbal instructions to their real-world context. The team participated in the Talk2Car challenge, which tasks researchers with accurately identifying the region of a front-view image that a textual description refers to.
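To make the grounding task concrete, the sketch below shows one common way a predicted image region can be scored against an annotated one: intersection over union (IoU) of two bounding boxes. It is a minimal illustration, not the CAVG team's code. The `(x_min, y_min, x_max, y_max)` box format, the function names, and the 0.5 threshold are all assumptions made for this example.

```python
from typing import Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in pixel coordinates.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    # Corners of the overlapping rectangle, if there is one.
    ix_min = max(a[0], b[0])
    iy_min = max(a[1], b[1])
    ix_max = min(a[2], b[2])
    iy_max = min(a[3], b[3])

    # Width and height are clamped at zero when the boxes do not overlap.
    inter_w = max(0.0, ix_max - ix_min)
    inter_h = max(0.0, iy_max - iy_min)
    inter = inter_w * inter_h

    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter

    return inter / union if union > 0 else 0.0


def is_correct(pred: Box, truth: Box, threshold: float = 0.5) -> bool:
    """Count a prediction as a hit when IoU reaches the threshold.

    The 0.5 default is an assumed convention for this sketch.
    """
    return iou(pred, truth) >= threshold
```

For example, a command such as "pull up behind the parked truck" would be paired with one annotated box around the truck, and a model's predicted box would count as a hit only if it overlaps that annotation closely enough.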