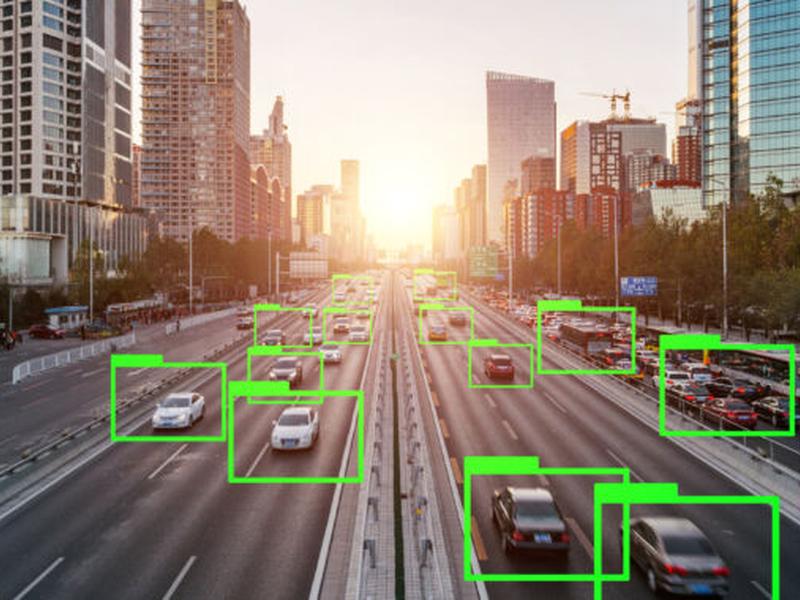

AI chatbots are being used in autonomous driving systems to help make moral decisions, but there are concerns about whether they are ready for the task and capable of nuanced judgment. Research has shown that chatbots and humans have similar priorities, though with clear deviations. The Trolley Problem, a classic moral dilemma, has been used to test chatbots’ decision-making abilities. However, testing with more nuanced questions is needed to ensure that chatbots can make ethical decisions in real-life situations.