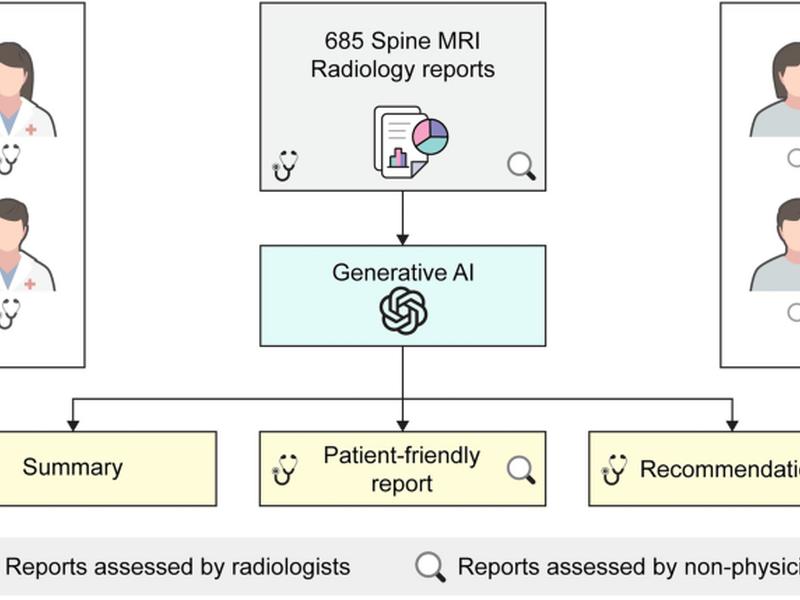

This study examines the use of AI-generated reports in medical imaging and identifies cases of artificial hallucination with clinically significant implications. These hallucinations were more common in patient-friendly reports and often involved inaccurate explanations of medical terminology. Effective communication is essential in patient-centered healthcare, and the findings highlight the importance of accurate, clear language in radiologic reports.