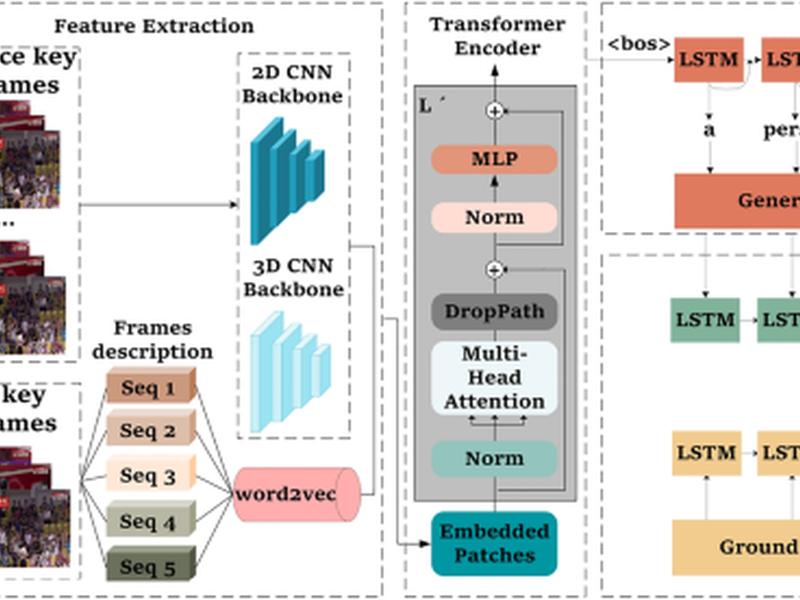

This article reviews progress in image and video captioning, focusing on the encoder-decoder framework that is widely used in deep-learning-based image captioning. In this framework, a CNN encodes the image into a feature representation, and an RNN decodes those features into a text description. Recent research on image-text multimodal learning has also made great progress, for example by introducing the TF-IDF model to weight words and by replacing the RNN in the m-RNN model with a long short-term memory (LSTM) network.
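To make the word-weighting idea concrete, here is a minimal sketch of TF-IDF computed over a handful of captions. The article does not say which TF-IDF variant is used or how the weights feed into the captioning model, so the formula below (term frequency times `log(N / df)`), the helper name `tfidf_weights`, and the sample captions are all assumptions chosen for illustration.

```python
import math
from collections import Counter


def tfidf_weights(captions):
    """Return one {word: weight} dict per caption, using tf * log(N / df).

    Hypothetical helper: this is one common TF-IDF variant, not necessarily
    the one used in the work the article summarizes.
    """
    docs = [caption.lower().split() for caption in captions]
    n_docs = len(docs)
    # Document frequency: the number of captions that contain each word.
    df = Counter(word for doc in docs for word in set(doc))
    weights = []
    for doc in docs:
        tf = Counter(doc)
        weights.append({
            word: (count / len(doc)) * math.log(n_docs / df[word])
            for word, count in tf.items()
        })
    return weights


# Made-up captions, used only to exercise the function.
captions = [
    "a dog runs on the grass",
    "a cat sits on the sofa",
    "a dog chases a cat",
]
weights = tfidf_weights(captions)
```

Under this variant, a word that occurs in every caption (such as "a") gets a weight of zero, while a word that occurs in only one caption (such as "grass") is weighted more heavily than one shared by several captions (such as "dog").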
